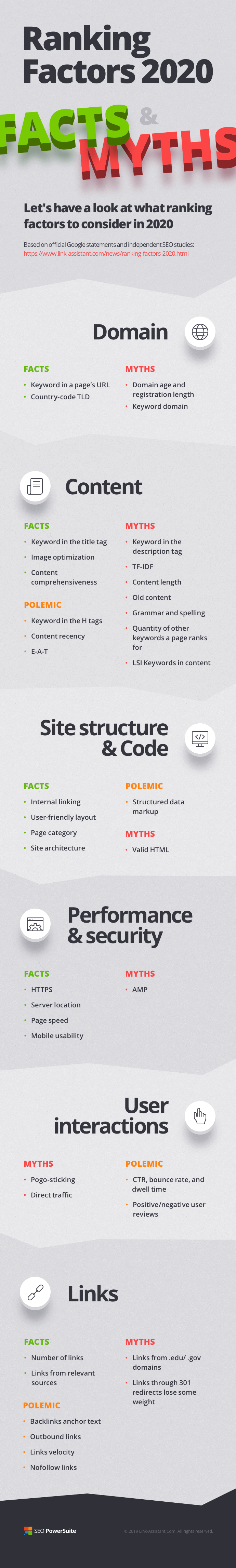

It seems like only a little while ago that Google hinted at having 200+ ranking factors. In fact, that happened back in 2009, and we are now in 2024, more than a decade later.

Google has evolved drastically since then. Today, neural matching, an AI-based method, processes about 30% of all searches, and Google can recognize the concepts behind keywords. It has introduced RankBrain, mobile-first indexing, and HTTPS as a ranking signal. As we need to adapt to these changes and find ways to get to the top of SERPs, the topic of ranking factors remains as fresh as ever.

So let's have a look at what ranking factors to consider in 2024, and what ranking myths to leave behind.

Domain Factors

Domain age and registration length.

Domain age has been considered a ranking factor for quite a long time. This belief sent webmasters hunting for old domains in the hope of benefiting from their weight. Moreover, domain names with longer registration periods were believed to look more legitimate in Google's eyes. This belief probably emerged thanks to one of the old Google patents, which says, in particular, that:

"Valuable (legitimate) domains are often paid for several years in advance, while doorway (illegitimate) domains rarely are used for more than a year."

Google, however, has been denying that domain age has any impact on rankings. Back in 2010, Matt Cutts clearly declared that there was no difference for Google "between a domain that's six months old vs one-year-old". John Mueller was even more direct, saying "no" to both domain age and registration length being ranking factors.

To sum up

There are many examples of new domains used for high-quality, valuable sites and plenty of old domains used for spamming. Domain age and registration length on their own are unlikely to seriously impact rankings. Older domains probably benefit from other factors, such as backlinks. However, if a domain has a bad history of rank drops or spam penalties, Google might negate the backlinks pointing to it. And in some cases, a domain penalty might be passed on to a new owner.

Keyword domains are domains that either contain keywords in their names or consist entirely of keywords (so-called exact match domains, or EMDs). They are believed to rank better and faster than non-keyword-based domains, as their names serve as a relevance signal. In one of his video answers in 2011, Matt Cutts indirectly confirmed that keywords in domain names were taken into account. He recalled users who had been complaining about Google "giving a little too much weight for keywords in domains".

"…just because keywords are in a domain name doesn't mean that it'll automatically rank for those keywords. And that's something that's been the case for a really, really long time."

"...it's kind of normal that they would rank for those keywords and that they happen to have them in their domain name is kind of unrelated to their current ranking."

Moreover, as quite a large number of EMDs were caught using spammy practices, Google rolled out the EMD algorithm, which closed the ranking door for low-quality EMDs.

To sum up

So at the end of the day, having a keyword as a domain name has little to do with a ranking boost today. There are plenty of hyper-successful businesses that have branded, non-keyword-based domains. Everyone knows Amazon and its brand protection, for example, but not something like buythingsonline.com. Think about Facebook, Twitter, TechCrunch, etc. Quality and user value are much more important.

Keyword in a page's URL

Using a target keyword as part of the page's URL can act as a relevancy ranking signal. Moreover, such URLs may serve as their own anchor texts when shared as-is. However, the ranking impact is rather small.

"I believe that is a very small ranking factor. So it is not something I'd really try to force. And it is not something I'd say it is even worth your effort to restructure your site just so you can get keywords in your URL."

To sum up

URLs containing keywords get highlighted in search results, which might impact a site's CTR, as they hint to users at the page content. Google sees such URLs as slight ranking signals. Taking into account their ability to serve as their own anchors, they are probably worth the effort.
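As a small illustration of the URL advice above, the sketch below turns a page title into a short, keyword-bearing URL slug. The function name `slugify` and the `max_words` cutoff are assumptions made for this example; nothing here is prescribed by Google.

```python
import re
import unicodedata


def slugify(title, max_words=6):
    """Build a lowercase, hyphen-separated URL slug from a page title.

    max_words is a hypothetical cutoff that keeps the URL short.
    """
    # Strip accents by decomposing characters and dropping non-ASCII marks.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Drop apostrophes so "page's" becomes "pages" rather than "page-s".
    cleaned = ascii_text.lower().replace("'", "")
    words = re.findall(r"[a-z0-9]+", cleaned)
    return "-".join(words[:max_words])


print(slugify("Keyword in a Page's URL"))
```

Given the small ranking weight discussed above, a helper like this makes more sense for new pages than for restructuring URLs that already exist.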

A country-code top-level domain (ccTLD) is a domain with an extension pointing at the website's relation to a certain country (for example, .it for Italy or .fr for France, .ca for Canada, etc.). It is believed that having a ccTLD helps you rank better in the target region.

"...are a strong signal to both users and search engines that your site is explicitly intended for a certain country ".

At the same time, having a country-code TLD may decrease your chances of ranking higher globally.

"You can target your website or parts of it to users in a single specific country speaking a specific language. This can improve your page rankings in the target country but at the expense of results in other locales/languages."

To sum up

A country-code top-level domain is a definite search ranking factor for a country-specific search. Thus, if your business targets a certain country's market, a ccTLD is a way to signal to Google that your content is relevant to users from that specific country.

Content Factors

Keyword in the title tag

The title tag tells search engines about the topic of a particular page. Moreover, search engines use it to form the search result snippet visible to users. It acts as a ranking factor that signals a page's relevance to a search query. However, today it's less important than it used to be (according to a recent correlation study).

"We found a very small relationship between title tag keyword optimization and ranking. This correlation was significantly smaller than we expected, which may reflect Google's move to Semantic Search."

Back in 2016, John Mueller claimed that title tags remained an important ranking factor, but not a critical one.

"Titles are important! They are important for SEO. They are used as a ranking factor. Of course, they are definitely used as a ranking factor but it is not something where I'd say the time you spend on tweaking the title is really the best use of your time."

"So the second biggest thing is to make sure that you have meta tags that describe your content."

"And have page titles that are specific to the page that you are serving. So don't have a title for everything. The same title is bad."

To sum up

Though there is some evidence that keyword-based title tags might play a less significant role in ranking, Google keeps highlighting their importance as a strong relevance factor. To make the most of title tags, make sure they are unique and specific to pages' topics and use keywords reasonably. And certainly, it's better to avoid meta title duplication across a website.
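The advice about avoiding duplicate titles can be checked mechanically. Below is a minimal sketch, assuming you already have each page's HTML in memory as a `url -> html` dictionary; the names `TitleParser`, `extract_title` and `find_duplicate_titles` are made up for this example. It uses only Python's standard `html.parser` module.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    """Return the page title with runs of whitespace collapsed."""
    parser = TitleParser()
    parser.feed(html)
    return " ".join(parser.title.split())


def find_duplicate_titles(pages):
    """pages: dict of url -> html. Returns {title: [urls]} for reused titles."""
    by_title = defaultdict(list)
    for url, html in pages.items():
        # Compare case-insensitively so "Blue Widgets" matches "blue widgets".
        by_title[extract_title(html).lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}
```

Any URL group returned by `find_duplicate_titles` is a candidate for a more specific, page-level title.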

Keyword in the description tag

The description tag used to be a ranking signal long ago. Though today it is no longer a ranking factor, it's still important for SEO, as it can affect CTR.

"But, as an indirect signal, there is anecdotal evidence that indirect attributes of better descriptions do help. Things like click-through rate (CTR), perception of the quality of the result, and perception of what your website offers all change when you optimize the meta description correctly."

Google officially stopped using the description tag in its search algorithm in 2009 (together with the keywords tag). However, it still widely uses it to form snippets in SERPs.

"Even though we sometimes use the description meta tag for the snippets we show, we still don't use the description meta tag in our ranking."

To sum up

The description tag still plays a significant role in SEO, even though it is not a ranking factor. Google uses it to create search snippets, so descriptions may serve as advertising, allowing users to see what your page is about right from the SERP. Writing descriptions that are highly relevant to your page's content may help you catch users' attention and improve your CTR. Thus, the time you spend working on descriptions is likely to pay off.

The H1 tag (also referred to as the heading) is often called the second title tag, as it usually contains a short phrase describing what the page is about. A piece of text wrapped in <H1></H1> is the most prominently visible piece of text on the page. Using the H1 tag more than once on a page is often said to be strongly inadvisable. Other H tags, H2 to H6, help make content more readable and give search engines an idea of the page's structure.

From one of the Google Webmasters hangouts with John Mueller in October 2015, we can gather that H tags bring little to no ranking bonus.

"...we do use it to understand the context of a page better, to understand the structure of the text on a page, but it's not the case like you'd automatically rank 1 or 3 steps higher just by using a heading."

As for how many H1 tags should be used on a single page, John Mueller simply said: "As many as you want".

To sum up

Though there's no clear confirmation that using keywords in H tags may result in a ranking boost, they do help search engines get a better idea of the page's context and structure. Moreover, the text in the H1 heading is considered the most significant piece of your content. Thus, placing your target keywords there looks like a good idea (if you do it reasonably).

Images can signal their relevance to search engines through alt text, file name, title, description, etc. These are important ranking factors, especially for image search.

"Alt text is extremely helpful for Google Images — if you want your images to rank there."

"By adding more context around images, results can become much more useful, which can lead to higher quality traffic to your site. You can aid in the discovery process by making sure that your images and your site are optimized for Google Images."

To sum up

Images are an important part of a website's content. They make it more appealing and easier to read, and sometimes provide more value than textual content. Optimizing images properly makes them appear in image search. And the more relevant they are to the context that surrounds them, the more likely they are to be placed higher in search results. Thus, image attributes taken together, such as alt text, titles, etc., are most likely significant ranking factors.

TF-IDF stands for term frequency-inverse document frequency. It's an information retrieval method, and it is believed to be one of the methods Google uses to evaluate content relevance. It's also considered an important factor for SEO and rankings, as an instrument for finding more relevant keywords.

"This is the idea of the famous TF-IDF, long used to index web pages.

...we are beginning to think in terms of entities and relations rather than keywords."

John Mueller has recently dwelled upon it, saying that Google has been using a huge number of other techniques and metrics to define relevance.

"With regards to trying to understand which are the relevant words on a page, we use a ton of different techniques from information retrieval. And there's tons of these metrics that have come out over the years."

To sum up

TF-IDF is quite an old technique that's been widely used for information retrieval in different fields, search engines included. By now, however, more modern and sophisticated methods have come into play. Though it doesn't work as a ranking factor, TF-IDF may still be useful in another way. Some case studies show a correlation between content optimization with TF-IDF and rank improvement. So, it may prove quite beneficial to use TF-IDF to enrich your content with more topically relevant keywords. Together with other factors, this may help improve your ranks.
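To make the term concrete, here is a minimal sketch of one common textbook variant of TF-IDF: a term's weight in a document is its relative frequency in that document multiplied by log(N / df), where N is the number of documents and df is the number of documents containing the term. This is only an illustration of the general technique. It is not a description of what Google or any SEO tool actually computes, and the function name `tf_idf` and the pre-tokenized input format are assumptions of the example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """documents: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(documents)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in documents:
        df.update(set(tokens))

    weights = []
    for tokens in documents:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            # Term frequency (share of the document) times inverse document frequency.
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    ["seo", "ranking", "factors"],
    ["seo", "title", "tag"],
    ["seo", "page", "speed"],
]
for doc_weights in tf_idf(docs):
    print(doc_weights)
```

In this toy corpus, "seo" appears in every document, so its weight is zero everywhere, while terms unique to one document get a positive weight. That is the intuition behind using TF-IDF to spot topically distinctive terms.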

On the one hand, there are industry studies that show a correlation between ranking and content length. It's been observed that long-form content (about 2000-3000 words in length) tends to rank higher and get more traffic than shorter copy. On the other hand, there are studies that didn't find such correlations.

Here's what John Mueller has recently claimed in one of the Reddit threads regarding word count.

"Word count is not a ranking factor. Save yourself the trouble."

Pretty clear, isn't it?

To sum up

Long-form content is more likely to cover a topic in depth, provide more value, and answer user intent effectively. Taken together, these factors positively affect rankings, while the length of a page's content can't by itself result in higher ranks.

Content comprehensiveness

Content comprehensiveness stands for how fully a page's content covers a given topic. In-depth content is believed to signal content quality, prove the website's expertise, and earn it more trust from users. Case studies suggest that in-depth content sometimes correlates with rank improvements.

"Creating high quality content takes a significant amount of at least one of the following: time, effort, expertise, and talent/skill. Content should be factually accurate, clearly written, and comprehensive."

"Creating compelling and useful content will likely influence your website more than any of the other factors discussed here. Users know good content when they see it and will likely want to direct other users to it."

Moreover, today Google favors content that is not only accurate and in-depth but also useful.

To sum up

High-quality content is comprehensive, accurate, and brings value to users. Avoid creating content for the sake of creation or mere promotion of your business or services. On the contrary, quality content should have a purpose, answer users' search intent, and provide the best solution to their needs.

After the Caffeine update, Google tends to give a ranking boost to fresher, recently created or updated content.

The Caffeine update was aimed at giving people more live search results, i.e. letting them find new relevant information faster. The update was made to Google's indexing system, making new info and updates appear faster in search.

"Caffeine provides 50 percent fresher results for web searches than our last index, and it's the largest collection of web content we've offered. "

Though Google's John Mueller has recently denied that more frequently updated websites get better ranking opportunities, there are exceptions to this 'rule'.

At the same time, there is Query Deserves Freshness (QDF), a part of the ranking algorithm that favors websites that provide the most recent information in certain search areas (such as news, trends, politics, events, etc.).

To sum up

In general, the answer to whether fresher content impacts rankings is: it depends. In the areas where users are likely to look for the most recent information, search results are processed by the Query Deserves Freshness part of the ranking algorithm, for which recency is a ranking factor. For other results, Caffeine comes into play, rapidly indexing the latest updates with no significant impact on rankings.

There's been some evidence that old content that hasn't been updated can still outrank fresher pages. Thus, the age of a page might be taken into account by the ranking algorithm.

Discussing one such case, John Mueller suggested that content that hadn't been updated for a long time but remained relevant and referred to could keep good positions in SERPs, even when fresher content appeared.

"It can really be the case that sometimes we just have content that looks to us like it remains to be relevant. And sometimes this content is relevant for a longer time.

I think it's tricky when things have actually moved on, and these pages just have built up so much kind of trust and links and all kinds of other signals over the years... well, it seems like a good reference page."

To sum up

There's no confirmation from Google that old content tends to rank better. Such cases happen, but their success is more likely to be connected with other factors, for example, quality, relevance, and a substantial number of references to those pages. Thus, it's not the age that matters, but the quality of content and the value it brings to users.

The majority of the top-ranking pages have few to no grammatical and spelling mistakes. Grammar affects content quality and is thus considered an indirect ranking factor.

Matt Cutts admitted a correlation between the quality of a website and the grammatical correctness of its content back in 2011, hinting that grammar and spelling may affect rankings indirectly.

"...I think it would be fair to think about using it as a signal.

We noticed a while ago, that if you look at… how reputable we think a page or a site is, the ability to spell correlates relatively well with that."

It's always good to fix known issues with a site, but Google's not going to count your typos.

To sum up

Although Google doesn't count your typos and says they don't impact rankings, poor grammar looks unprofessional (especially in niches such as banking, law, and medical services) and can leave users less satisfied with your site's content.

Quantity of other keywords a page ranks for

It's believed that the number of other keywords a page ranks for, apart from the target query, may be considered a quality ranking factor.

"When a user submits a search query to a search engine, the terms of the submitted query can be evaluated and matched to terms of the stored augmentation queries to select one or more similar augmentation queries. The selected augmentation queries, in turn, can be used by the search engine to augment the search operation, thereby obtaining better search results."

This means that if the algorithm chooses your page as the best fit for a certain query, it may also choose it as a result for other relevant queries.

To sum up

The mere number of ranking keywords is unlikely to be a ranking factor. However, if the patented technology has been implemented in the search algorithm, it's highly probable that ranking for one query makes it easier for the page to rank for other relevant queries with much less effort.

Since the appearance of Google's Quality Raters Guidelines, a website's Expertise, Authoritativeness and Trustworthiness (E-A-T) have become the terms connected to website quality. Many voices claim that E-A-T has become a strong signal that Google uses to evaluate websites and rank them.

Thus it's believed that providing additional information, for example, proper NAP, content authors' names and bio, may improve rankings.

John Mueller gave a comprehensive explanation of what the Quality Raters Guidelines were meant for, as well as whether E-A-T may be referred to as a ranking signal.

"A lot of this comes from the Google Raters Guidelines which are not direct search results or search ranking factors.

But rather this is what we give folks when they evaluate the quality of our search results."

To sum up

E-A-T today is a term many people like to repeat regarding website quality and thus, ranking impact. The Quality Raters Guidelines were created for Google employees whose task was to look through search results and make sure they were relevant and of high quality. They contain recommendations on what to consider quality results, etc. True, for some types of websites, 'Your Money or Your Life' sites for example, it's critical to provide expert information and prove expertise by providing the author's bio, etc. However, calling E-A-T a ranking factor seems to be a bit premature.

LSI Keywords in content

LSI stands for latent semantic indexing. It's an information retrieval method that finds hidden relationships between words and thus, provides more accurate information retrieval. There's an opinion that it helps Google to better understand the content of a website. The semantically related words that help the search engine recognize the meaning of words that may have different meanings are often called 'LSI keywords', and they are believed to impact rankings.

"There's no such thing as LSI keywords — anyone who's telling you otherwise is mistaken, sorry."

To sum up

LSI keywords are unlikely to have any impact on rankings. Latent semantic indexing is an old information retrieval method that modern search algorithms probably don't even use, relying instead on much more sophisticated methods.

Site Structure & Code Factors

Internal links allow for crawling and indexing a website's content. They also help search engines distinguish the most important pages (relative to other pages), and their anchor text acts as a strong relevance signal.

Touching on the internal linking subject during a Hangouts session, John Mueller claimed the following:

"You mentioned internal linking, that's really important. The context we pick up from internal linking is really important to us… with that kind of the anchor text, that text around the links that you're giving to those blog posts within your content. That's really important to us."

To sum up

Google crawls websites by following their internal links, thus getting an idea of the overall site structure. Internal links carry weight from and to pages, so a page that gets many links from other site pages looks more prominent in Google's eyes. Moreover, internal links' anchor text is likely to serve as a strong relevance signal. Thus, it's critical to provide links to the most valuable content and make sure there are no broken links on your website. It's also wise to use keyword-based anchors.

It's critical that your page layout makes your content immediately visible to users, especially after the rollout of the 'Page Layout algorithm', which tends to penalize sites with abusive and distracting ads and interstitial pop-ups.

Introducing the 'Page Layout' algorithm improvement back in 2012, Matt Cutts wrote:

"So sites that don't have much content "above-the-fold" can be affected by this change. If you click on a website and the part of the website you see first either doesn't have a lot of visible content above-the-fold or dedicates a large fraction of the site's initial screen real estate to ads, that's not a very good user experience. Such sites may not rank as highly going forward."

Later on, Gary Illyes confirmed the importance of the algorithm, saying "Yes, It is still important" on Twitter.

To sum up

A user-friendly layout is likely to play in favor of a website. If visitors can easily reach a page's content (without the need to scroll through giant ad blocks), it is considered a good user experience. Moreover, it's clear that Google intends to penalize all sorts of distracting advertising.

It's believed that a page that appears in a relevant category might get some ranking boost.

An existing Google patent on category matching describes a method that matches the search terms to a page's content, as well as to the page's category. It determines a category relevance score that may affect rankings:

"...A search criteria-categories score is determined indicating a quality of match between the search criteria and the categories. An overall score is determined based on the text match score and the category match score."

To sum up

Google is likely to use category matching as a ranking signal (but at the same time, it uses other approaches that allow it to provide better results). Besides, a hierarchical architecture that includes categories helps Google to understand pages' content better, as well as the overall site structure.

A poor website architecture that prevents search engine bots from crawling all the necessary pages of your website may hamper the visibility of the website in search results. Besides, it confuses your visitors.

John Mueller highlighted the importance of a well-thought-out site architecture, saying:

"In general I'd be careful to avoid setting up a situation where normal website navigation doesn't work. So we should be able to crawl from one URL to any other URL on your website just by following the links on the page."

"What does matter for us a little bit is how easy it is to actually find the content.

So it's more a matter of how many links you have to click through to actually get to that content rather than what the URL structure itself looks like."

To sum up

A well-thought-out site architecture allows for better crawlability and provides a convenient way for users to access the entire website content. Content that is hard to find is a sign of poor user experience, and it is not likely to rank well.
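The two points from the quotes above, that every page should be reachable by following links and that the number of clicks needed to reach it matters, can be checked with a breadth-first search over your internal link graph. This is a minimal sketch, assuming you already have the links in a `page -> [linked pages]` dictionary (for example, exported from a crawler); the function names and the sample site are hypothetical.

```python
from collections import deque


def click_depths(links, start):
    """Return {page: minimum number of clicks from start} using breadth-first search.

    links: dict mapping each page to the list of pages it links to.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links, start):
    """Pages known to the link graph that cannot be reached from start."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(click_depths(links, start)))


site = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": [],
    "/blog/post-1": ["/"],
    "/orphan": ["/"],
}
print(click_depths(site, "/"))
print(unreachable_pages(site, "/"))
```

Pages with a large click depth, or pages that show up as unreachable, are the ones worth linking to from more prominent parts of the site.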

HTML errors may signal a site's poor quality, and thus lower its rankings. And on the contrary, valid HTML helps rank better.

"Although we do recommend using valid HTML, it's not likely to be a factor in how Google crawls and indexes your site."

"As long as it can be rendered & SD extracted: validation pretty much doesn't matter."

In the same thread, he also denied that HTML validity has any impact on rankings today.

To sum up

Though valid HTML can't be called a ranking factor, and Google encourages webmasters not to fret about it, valid HTML has some virtues you can't ignore. It positively affects the crawl rate and browser compatibility, and it improves user experience.

Structured data markup

Structured data markup (schema.org) helps search engines better identify the meaning of a certain piece of content (for example, event, organization, product, etc.). Using structured data on a website may result in a rich snippet in search results. It is also considered to affect rankings.

"There's no generic ranking boost for SD usage. That's the same as far as I remember. However, SD can make it easier to understand what the page is about, which can make it easier to show where it's relevant (improves targeting, maybe ranking for the right terms). (not new, imo)"

To sum up

It sounds like structured data is not a ranking factor, but it helps rank a website after all. Structured data acts indirectly, helping search engines better understand, and thus assess, your content. Plus, it may improve your visibility in the search results by producing a rich snippet or another rich result.

Performance & Security Factors
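For readers who have not seen structured data in practice, here is a minimal sketch that builds a schema.org `Event` description in JSON-LD, one of the common ways to embed structured data in a page. The helper name `event_json_ld` and the sample values are assumptions of this example. Only a few basic properties are shown, and search engines may expect additional fields before showing a rich result.

```python
import json


def event_json_ld(name, start_date, venue):
    """Return a <script> block with schema.org Event markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": name,
        "startDate": start_date,  # ISO 8601 date or date-time string
        "location": {"@type": "Place", "name": venue},
    }
    # Escape "<" so that text such as "</script>" inside a value
    # cannot terminate the surrounding script element early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(event_json_ld("SEO Meetup", "2024-09-12T18:00", "Town Hall"))
```

The resulting block would typically be placed in the page's HTML, and it is worth validating the output with a structured data testing tool before relying on it.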

Google tends to rank HTTPS websites better, striving to protect users. Having a valid SSL certificate helps a website receive some ranking boost.

"...over the past few months, we've been running tests taking into account whether sites use secure, encrypted connections as a signal in our search ranking algorithms. We've seen positive results, so we're starting to use HTTPS as a ranking signal."

"It's important in general, but if you don't do it, it's perfectly fine. If you're in a competitive niche, then it can give you an edge from Google's point of view. With the HTTPS ranking boost, it acts more like a tiebreaker."

To sum up

Security's been one of Google's concerns for quite a long time. So it's not surprising that HTTPS was made a ranking signal. And though it is not considered a big ranking game changer, it seems better to migrate to HTTPS than not to. Leaving aside SEO, think about doing it for the sake of users.

A server location may signal to search engines your website's relevance to the targeted country and audience.

In this video, Matt Cutts hints at the probability of better rankings if your site is hosted in the country your target audience lives in.

To sum up

So, just like having a country-code top-level domain, a hosting server location is likely to help search engines better understand what audience the site content targets and include it in search results for a certain country. And that's especially important when you target a highly competitive local SERP.

Page speed is considered to be one of the most significant ranking factors, especially for mobile sites.

Site speed has officially been a ranking factor for a long time. In 2018, it was also announced as a ranking factor for mobile search.

"Although speed has been used in ranking for some time, that signal was focused on desktop searches. Today we're announcing that starting in July 2018, page speed will be a ranking factor for mobile searches."

To sum up

Today, as the majority of searches are made on mobile devices, and users tend to leave a website that takes more than 3 seconds to load, having a faster website is a huge advantage. Moreover, Google's giving more ranking opportunities to faster websites. Thus, it's critical to take the necessary steps to increase your site speed (which may include using a CDN, applying compression, reducing redirects, optimizing images and videos, etc.).

Mobile usability is one of the principal factors for a website to rank in mobile search. Multiple case studies show a correlation between mobile usability and rankings.

Announcing an increase in the number of mobile-friendly results in search, Google highlighted the importance of mobile usability for rankings.

"As more people use mobile devices to access the internet, our algorithms have to adapt to these usage patterns.

...we will be expanding our use of mobile-friendliness as a ranking signal. This change will affect mobile searches in all languages worldwide and will have a significant impact in our search results."

To sum up

Due to the increased number of mobile users, Google's encouraging websites to make their content accessible for mobile users and ensure smooth performance on mobile devices. Think of everything that can improve user experience for mobile searchers — design, layout, functionality, etc.

Accelerated mobile pages (AMP) are the so-called mobile-friendly copies of website pages. In fact, they are lighter HTML pages created to save users' time on loading content. There's been evidence that AMP pages tend to rank higher. Having AMPs is considered to be a condition for a website to appear in the news carousel.

"So I guess you need to differentiate between AMP pages that are tied in as separate AMP pages for your website and AMP pages that are the canonical version of your site.

...if it's a canonical URL for search, if it's the one that we actually index, then yes we will use that when determining the quality of the site when looking at things overall."

To sum up

AMPs are a great solution for mobile web — they make websites much lighter and thus, deliver content to users faster (and speed matters greatly for mobile users). Though not a direct ranking signal, AMPs still may impact site quality.

User Interaction Factors

CTR, bounce rate, and dwell time

Google is believed to take into account the so-called user interaction signals, such as CTR, dwell time and bounce rate. Based on the time a user spent on a website before bouncing back to the SERP, Google may evaluate the page's quality. Some case studies show a correlation between rankings and bounce rate, CTR, and time spent on a website.

"Dwell time, CTR… those are generally made up crap. Search is much more simple than people think."

To sum up

All things user behavior seem to be a highly controversial topic. On the one hand, we have Google multiple times denying their use as ranking factors. On the other hand, there are cases of correlation between them and rankings. Leaving aside rankings, a good CTR is a good CTR, and making people spend more time on your website may lead to more conversions. So you don't need to give up tracking these metrics.

Pogo-sticking is a type of user behavior that occurs when a user 'jumps' across a SERP from one result to another to find the most relevant one. It is believed that pogo-sticking may negatively affect rankings.

John Mueller explained during a Hangout session that pogo-sticking had not been regarded as a ranking signal.

"We try not to use signals like that when it comes to search. So that's something where there are lots of reasons why users might go back and forth, or look at different things in the search results, or stay just briefly on a page and move back again. I think that's really hard to refine and say 'well, we could turn this into a ranking factor.' So I would not worry about things like that."

To sum up

Google seems to treat pogo-sticking as a variation of normal user behavior. Since it's hard to pin down the exact reason for it, pogo-sticking is not likely to become a ranking factor, at least in the near future.

There's been a confirmation that Google has been using data from Google Chrome to track website visitors. Thus, it is believed that Google can evaluate a website's traffic, determine how many people visit a site (and how often), and favor sites that receive a lot of direct traffic. Back in 2017, a correlation between direct traffic and website ranks was observed.

There's a Google patent named 'Document scoring based on traffic associated with a document', but there's no clear evidence that it has been used.

Moreover, Google denies any connection between traffic and rankings.

"No, traffic to a website isn't a ranking factor."

To sum up

Though Google might use the Chrome data to evaluate website relevance or quality, there hasn't been any clear confirmation from Google that direct traffic may play any significant role in providing higher ranks for a website.

Positive/negative user reviews

Positive or negative customer review sources may be taken into account by Google's ranking algorithm.

On the one hand, there is a patent on domain-specific sentiment classification:

"A domain-specific sentiment classifier that can be used to score the polarity and magnitude of sentiment expressed by domain-specific documents is created."

However, the technology is not likely to have been used, as John Mueller denied that ratings, reviews, etc. have ever been ranking factors.

To sum up

Google is probably on its way to including positive or negative sentiment in its ranking algorithm. User reviews do play an important role for ranking in local search. However, when it comes to organic search rankings, Google doesn't seem to have taken that step yet.

Link Factors

Google's ranking algorithm takes into account the number of domains and pages that link to a website. Various studies show a strong correlation between the number of backlinks and rankings.

"...counting citations or backlinks to a given page… gives some approximation of a page's importance or quality. PageRank extends this idea by not counting links from all pages equally, and by normalizing by the number of links on a page."

To sum up

Backlinks are definitely one of the most important ranking factors, and the number of backlinks matters. However, backlinks are not treated equally, and quality mostly trumps quantity. Thus, you need to strive for backlinks from quality and authoritative websites, rather than chasing big numbers.
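The idea in the quote above (links are not counted equally, and each page's vote is normalized by the number of links on it) can be illustrated with the textbook PageRank iteration. This is a minimal sketch of the published algorithm, not Google's production ranking. The damping factor of 0.85, the iteration count, and the tiny three-page graph are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank over a dict mapping page -> list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Normalize by the number of outgoing links on the page.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical graph: "a" and "b" both link to "c", and "c" links back to "a".
ranks = pagerank({"a": ["c"], "b": ["c"], "c": ["a"]})
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy graph, page "c" ends up on top because it receives two links, and "a" outranks "b" because its single backlink comes from the highest-scoring page. That is the "quality over quantity" point in miniature.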

Links from relevant sources

Backlinks from the sources relevant to the referred page are considered to be strong ranking signals.

"We continued to protect the value of authoritative and relevant links as an important ranking signal for search."

"As many of you know, relevant, quality inbound links can affect your PageRank (one of many factors in our ranking algorithm)."

To sum up

It's quite obvious that Google considers backlinks from topically relevant websites or pages to be strong ranking signals. So again, a couple of backlinks from relevant websites (in the same niche) or pages with topically relevant content may do you much more good than ten links from less relevant sources.

Anchor texts of backlinks may signal the relevance of a linked page and thus impact the page's rankings for target keywords.

"The text of links is treated in a special way in our search engine. ...we associate it with the page the link points to. This has several advantages. ...anchors often provide more accurate descriptions of web pages than the pages themselves."

However, Google warns against unnatural, optimized anchors.

"Here are a few common examples of unnatural links that may violate our guidelines:

...Links with optimized anchor text in articles or press releases distributed on other sites. For example: There are many wedding rings on the market. If you want to have a wedding, you will have to pick the best ring."

To sum up

Well, this looks like a delicate topic, because you really need to be cautious with keyword-based anchors. On the one hand, the search algorithm takes anchor texts into account. On the other hand, excessive use of keyword-based anchors may look unnatural and, consequently, suspicious, and may do more harm than good in the end. So it's highly advisable to use keyword-based anchors in moderation. Think of anchor texts as short descriptions of the content you link to, and avoid stuffing them with target keywords.

Links from .edu/.gov domains

Backlinks from .edu and .gov domains are considered to pass more weight to websites they refer to.

Google's Matt Cutts stated that links from .edu and .gov domains are normally treated just like any other backlinks.

"It's not like a link from an .edu automatically carries more weight, or a link from a gov automatically carries more weight."

"Because of the misconception that .edu links are more valuable, these sites get link-spammed quite a bit, and because of that, we ignore a ton of the links on those sites."

To sum up

Websites on .edu and .gov domains are, by and large, quality, authoritative websites, so they usually get higher ranks. However, when it comes to passing on their weight, Google tends to treat them the same as other websites, not giving them any additional weight just for having such domain extensions. Instead of putting effort into earning a link from a .gov site, for example, it's much wiser to try and earn links from niche-relevant sites.

Though outbound links are supposed to leak your page's weight, linking to quality, authoritative resources may work in favor of your rankings.

"Our point of view… links from your site to other people's sites isn't specifically a ranking factor. But it can bring value to your content and that in turn can be relevant for us in search."

Here's how he answered a question about the impact of outbound links on SEO in a recent Hangouts session:

"Linking to other websites is a great way to provide value to your users. Often times, links help users to find out more, to check out your sources and to better understand how your content is relevant to the questions that they have."

To sum up

Well, there is neither a definite 'yes' nor a definite 'no'. It seems that outbound links look fine to Google as long as they are placed in a relevant context and help users explore the subject they are interested in more thoroughly.

Stable, continuous growth in the number of backlinks over time speaks of a website's increasing (or continuing) popularity, so it may lead to higher positions in search results. If a website loses more links than it acquires over time, Google may demote it.

One of Google's patents deals with scoring websites based on link behavior. Here are some extracts:

"...the link-based factors may relate to the dates that new links appear to a document and that existing links disappear."

"Using the time-varying behavior of links to (and/or from) a document, search engine may score the document accordingly."

To sum up

I think it is likely that Google tracks when websites gain and lose backlinks. By analyzing this data, it can make assumptions about their growing or falling popularity and treat the websites accordingly: promote or demote them in the SERPs. So if your page continues to get backlinks over time, it may be considered relevant and 'fresh', and may be eligible for higher ranks.
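To watch this trend on your own site, you can tally how many backlinks appeared and disappeared each month. The sketch below assumes you already have two lists of ISO dates ("YYYY-MM-DD"), for example exported from a backlink tool. The function name and sample dates are hypothetical, and this says nothing about how Google itself measures link growth.

```python
from collections import Counter


def net_link_growth(gained_dates, lost_dates):
    """Return {"YYYY-MM": links gained minus links lost}, sorted by month."""
    net = Counter()
    for date in gained_dates:
        net[date[:7]] += 1  # the first 7 characters are "YYYY-MM"
    for date in lost_dates:
        net[date[:7]] -= 1
    return dict(sorted(net.items()))


# Hypothetical export: dates when backlinks were first seen and when they vanished.
gained = ["2024-01-05", "2024-01-20", "2024-02-11", "2024-03-02"]
lost = ["2024-02-15", "2024-03-09", "2024-03-28"]
print(net_link_growth(gained, lost))
```

A month with a negative number is one where the site lost more links than it acquired.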

Links through 301 redirects lose some weight

Links pointing to a redirected page pass less weight to the destination page than direct links to that page. So, 301 redirects work somewhat like a negative ranking factor.

Later on, answering a question about link equity loss from redirect chains, John Mueller claimed the following:

"For the most part that is not a problem. We can forward PageRank through 301 and 302 redirects. Essentially what happens there is we use these redirects to pick a canonical. By picking a canonical we're concentrating all the signals that go to those URLs to the canonical URL."

To sum up

At the end of the day, there's no difference for Google between a direct link and a link that points to a redirected page. The latter will pass the same weight to the destination page as if it were a direct link. So you probably don't need to fret too much about 301 redirects. However, too many redirects (and redirect chains) can have a bad impact on a website's performance (and performance IS a ranking factor). What's more, an excessive number of redirects is bad for a site's crawl budget.
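Redirect chains are easy to spot once you have a list of your redirects. The sketch below works on a plain dictionary of source URL to target URL (for instance, one built from your server config or a crawl export) and makes no network requests. The function name, the `max_hops` limit of 10, and the sample paths are assumptions made for the example.

```python
def resolve_redirects(start_url, redirect_map, max_hops=10):
    """Follow redirects from start_url and return the full chain of URLs.

    Raises ValueError if the chain loops or exceeds max_hops.
    """
    chain = [start_url]
    seen = {start_url}
    current = start_url
    while current in redirect_map:
        current = redirect_map[current]
        if current in seen:
            raise ValueError(f"redirect loop detected at {current}")
        chain.append(current)
        seen.add(current)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start_url}")
    return chain


# Hypothetical redirect rules: /old was moved twice over the years.
redirects = {"/old": "/newer", "/newer": "/newest"}
chain = resolve_redirects("/old", redirects)
print(" -> ".join(chain), f"({len(chain) - 1} hops)")
```

Any chain with more than one hop is a candidate for cleanup: point the first URL straight at the final destination.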

Google's ranking algorithm doesn't take into account links marked with a nofollow attribute. Such links don't carry any weight, thus you can't count on them (in terms of having any ranking impact).

Recently, Google announced a new approach to counting nofollow links, saying it would treat them as hints rather than directives.

"When nofollow was introduced, Google would not count any link marked this way as a signal to use within our search algorithms. This has now changed.

All the link attributes — sponsored, UGC and nofollow — are treated as hints about which links to consider or exclude within Search."

"The move to a hint based system may cover every link list to nofollow, including those from publications that blanket nofollow. We don't however have anything to announce at the moment, but I'm sure your sites will appreciate this move on the long run."

To sum up

The nofollow attribute was introduced some 15 years ago to fight spammy links. It served as an instruction for search engine bots not to take the link into account. From now on, it will be up to Google to decide whether to use the link for ranking purposes or not. It's unclear, however, what will influence that decision.
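If you want to check how the links on a page are marked up, the three values mentioned in the quote (nofollow, sponsored, ugc) all live in the `rel` attribute of the `<a>` tag. Below is a minimal sketch using Python's standard `html.parser` module. The class name, function name, and sample HTML are made up for illustration, and the code only reports the markup; it does not predict how Google will treat any link.

```python
from html.parser import HTMLParser

# The rel values that Google says it treats as hints.
HINT_VALUES = {"nofollow", "sponsored", "ugc"}


class LinkRelCollector(HTMLParser):
    """Collects (href, hint values) for every <a href=...> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. rel="nofollow ugc".
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        hints = sorted(HINT_VALUES.intersection(rel_tokens))
        self.links.append((href, hints))


def collect_link_hints(html):
    parser = LinkRelCollector()
    parser.feed(html)
    parser.close()
    return parser.links


sample = (
    '<a href="/guide">Guide</a>'
    '<a href="https://example.com/ad" rel="sponsored">Ad</a>'
    '<a href="https://example.com/post" rel="nofollow ugc">Comment link</a>'
)
for href, hints in collect_link_hints(sample):
    print(href, hints or "no hints")
```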

A cheatsheet of Google ranking factors for 2024

Conclusion

To be clear, this is definitely not an exhaustive list of Google's ranking factors. I believe it's pretty much impossible to verify all of them. I aimed to examine popular assumptions about would-be ranking factors and to find any confirmation or denial from Google. I hope you'll find it useful.

Google has greatly evolved over the last ten years. Many things that used to work are no longer considered best practices. On the other hand, new factors keep coming into play, and I'm sure we'll see more changes in the upcoming years. We can conclude that today, there is a combination of factors that is more likely to result in good rankings: good old content (that satisfies user intent) and backlinks from trusted, relevant sources, powered by a secure and quick-loading website (plus everything else that provides a great user experience).

What do you think? Your comments, opinions, and discussions are welcome.